CraftedGPT: Build In Public Update #2

Quick Refresher

In case this is the first time you’re reading about CraftedGPT, here’s a reminder of what the Build In Public initiative is. And be sure to check out Update #1 to get fully up to speed!

A small tiger team at Crafted is deploying a generative AI interactive persona to help busy software executives self-educate on our services, client reviews, case studies, and thought leadership. Oh, and to demonstrate our radically collaborative and iterative Balanced Team product development practices, we’re building this in public. Check back for updates!

Key Takeaways

These are the Balanced Team’s key takeaways since our first update:

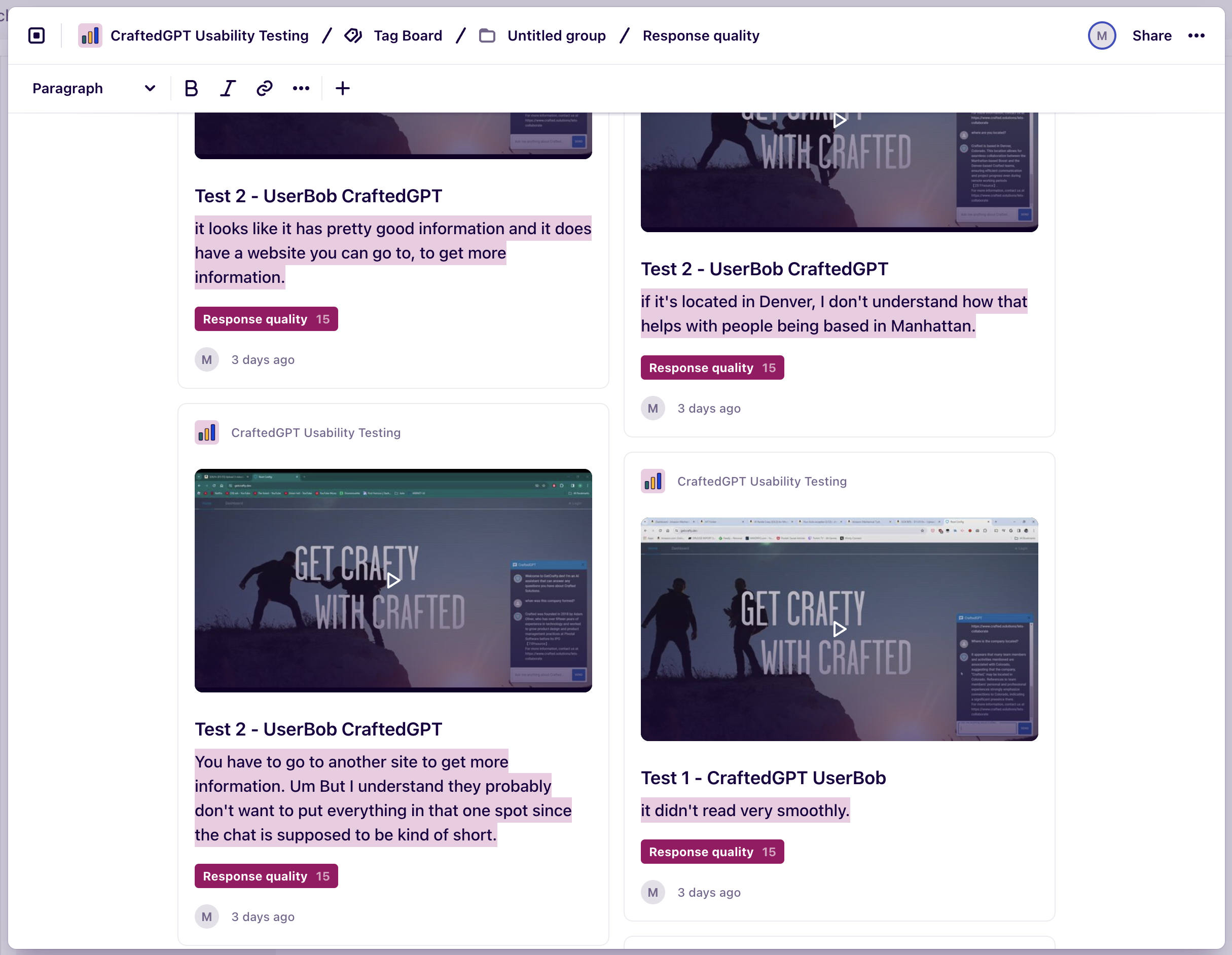

$9 spent on usability testing brought to light glaring flaws with CraftedGPT and obvious areas for improvement.

UserBob is great for inexpensive, fast feedback.

Dovetail is perfect for synthesizing feedback and quickly gleaning insights through transcription, tagging, and super-cuts.

See the Product Design section below for more details.

Amazon’s generative AI assistant “Q” was incredibly easy to set up: it crawled our website and produced a chatbot backed by a large language model (LLM), with impressive initial results similar to (and in some ways better than) our ChatGPT Assistant model.

See the Engineering section below for more details.

We aren’t ready to launch CraftedGPT. There are key points of feedback that need to be prioritized for the MVP (minimum viable product).

See the Product Management section for more details.

Testing iterations of your LLM prompt (aka instructions) is tricky, but there are tools out there to help.

PromptLayer is one we plan to experiment with.

They have a video describing the exact test setup we’re looking for: Building & Evaluating a RAG Chatbot.

See the Product Management section below for more details.

Product Design

Summary

Once the POC was live on getcrafty.dev, we used usability testing to see what users who had never seen CraftedGPT thought of the experience. Even with a tiny budget and a small sample size, we came away with high-value improvements to make.

Goals of Research

Learn what users think about the chatbot experience.

Learn what users think of the response quality and speed.

Learn what users might ask the chatbot.

Tools

We leveraged:

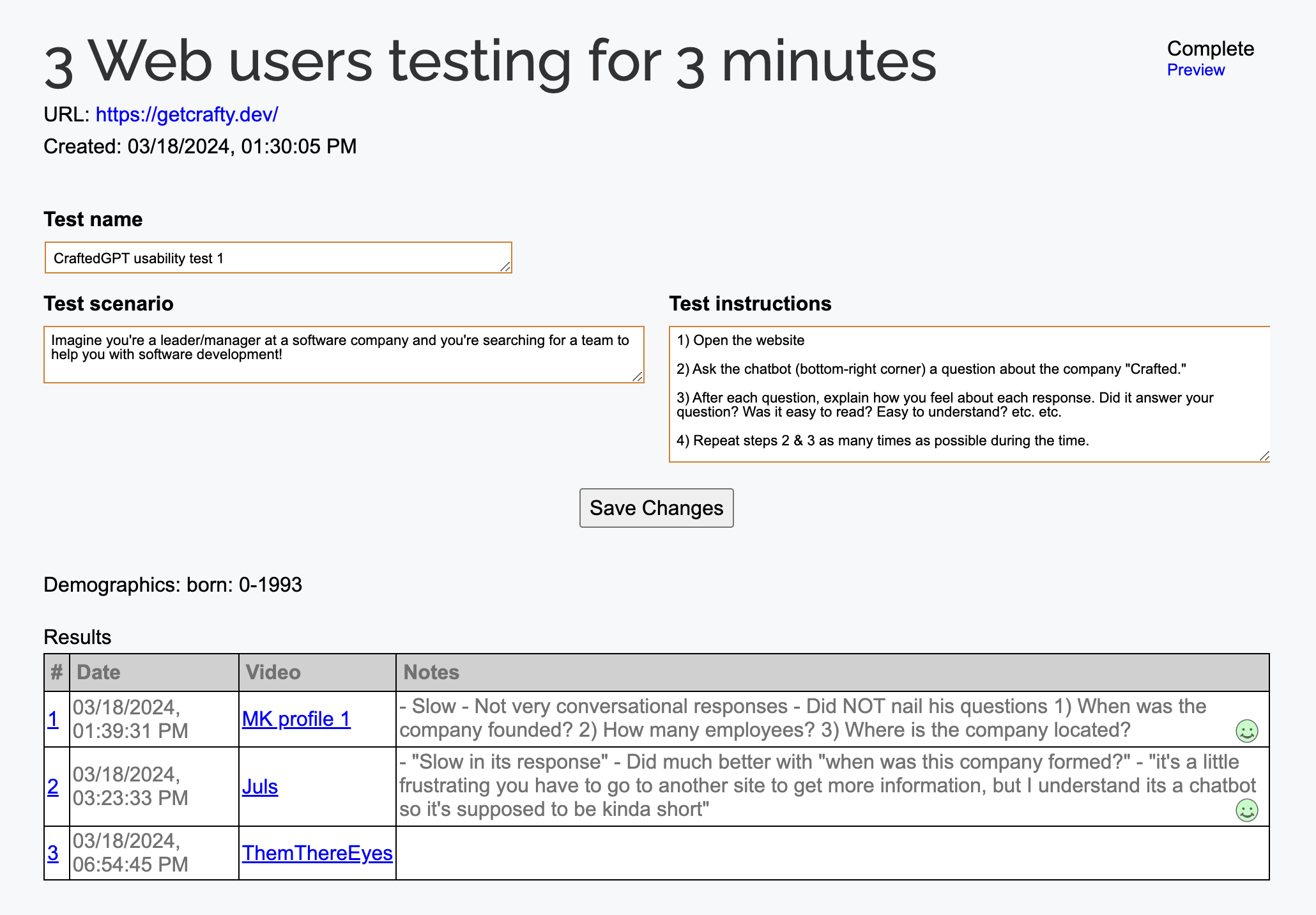

Here’s the prompt we used on UserBob:

1. Open the website

2. Ask the chatbot (bottom-right corner) a question about the company "Crafted."

3. After each question, explain how you feel about each response. Did it answer your question? Was it easy to read? Easy to understand? etc. etc.

4. Repeat steps 2 & 3 as many times as possible during the time.

Limitations of Research

Small sample size: The results are from three external users and six internal users.

Budget: We spent only $9 on the UserBob usability testing.

The external users didn’t fit our ideal customer profile. The only filter we applied was to test only with users above 30 years of age. Otherwise they were selected at random and were not software professionals.

Insights

SPEED MATTERS to a chatbot user

In the three-person usability test, all three people mentioned that the response time was slow. There were nine negative mentions of speed across the three tests, plus more from internal testing.

Although CraftedGPT does quite well with some difficult questions, users were surprised that it didn’t answer basic questions well.

CraftedGPT did not perform well on some of the simplest questions users on UserBob asked such as:

When was the company founded?

Where are you located?

How many people work at Crafted?

When was the company formed?

Users have mixed opinions on response length.

From the usability testing, we heard both: A) the response isn’t everything I want to know and B) the response feels like word soup and it’s too much.

This may be a case-by-case scenario with specific questions, but we feel there’s more research to be done here.

Users felt odd about CraftedGPT’s confidence.

From the usability testing, we heard:

“It's kind of amusing that it's, it's like it doesn't know it's trying to, it's trying to infer what the company does. Doesn't the chat bot work for the company?”

“‘Crafted has been operational since at least May 2022’ - it’s weird that it would be at least”

Response structure is something we need to research further

A user mentioned they wanted the chatbot to be more conversational and another mentioned they would appreciate more bullet points.

Again, this may be a case-by-case scenario with specific questions, but we feel there’s more research to be done here.

Engineering

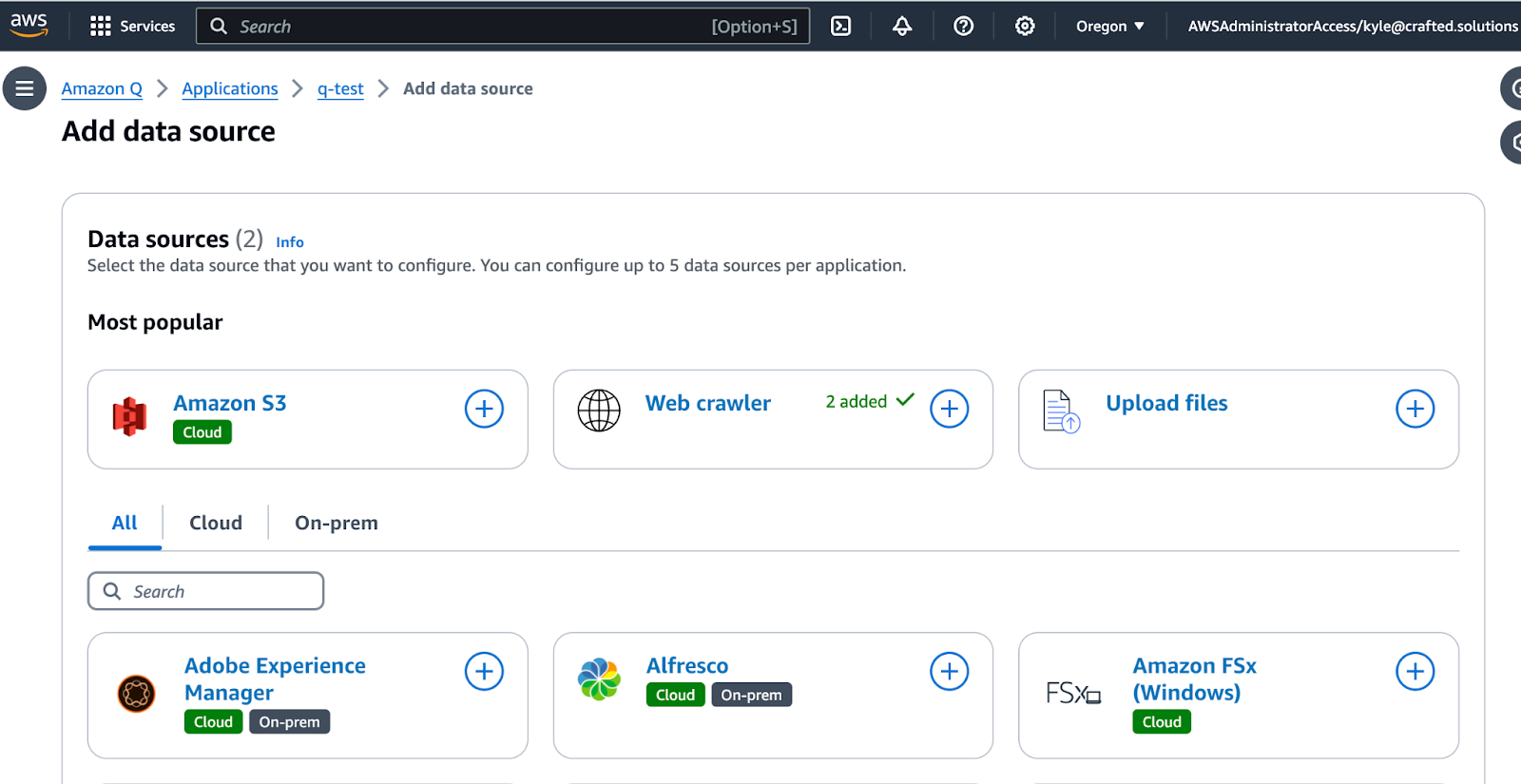

Amazon Q Evaluation

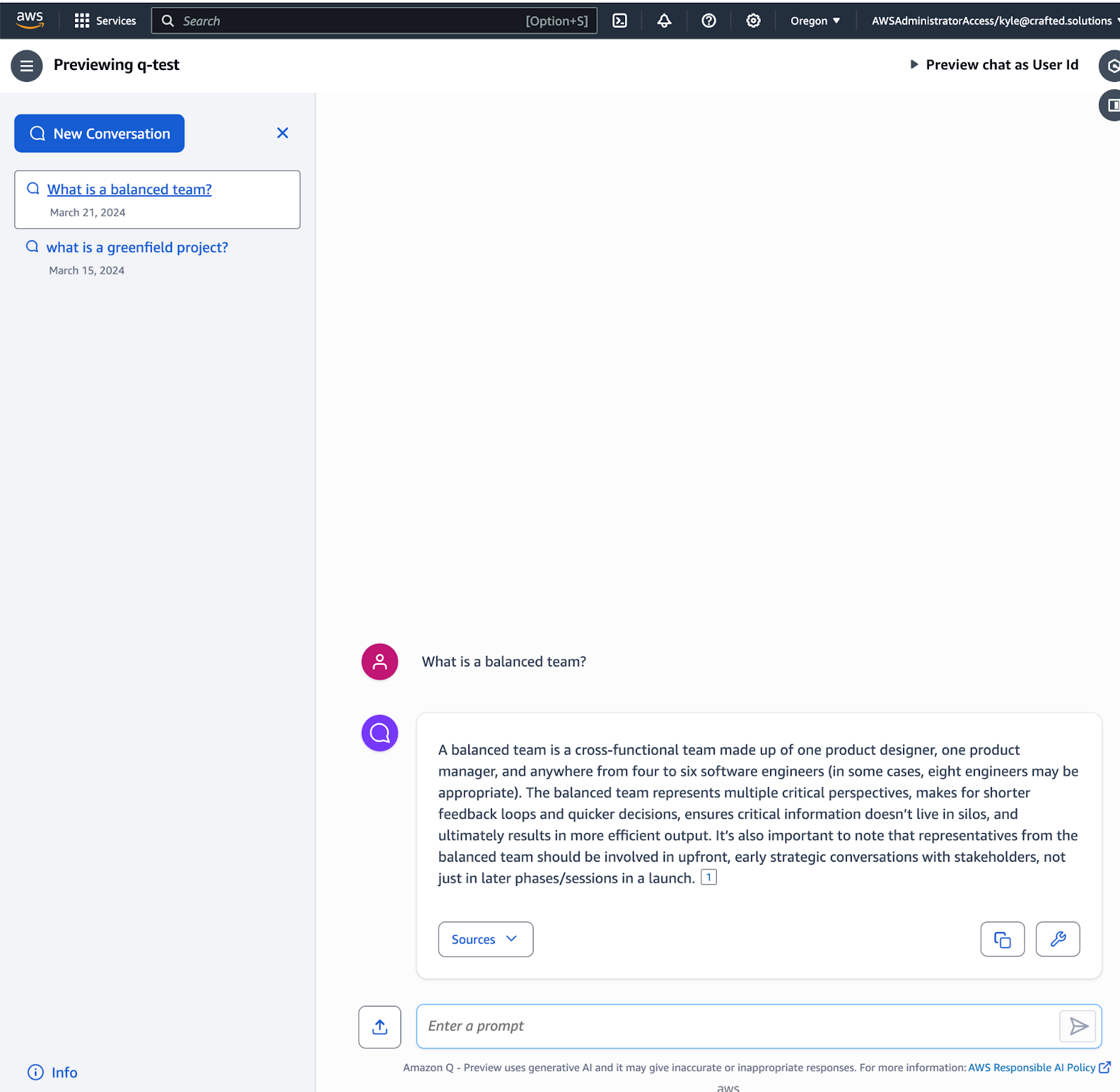

In about four clicks, Amazon Q can create a company-specific conversational LLM that identifies the sources for its responses.

The “Create application” button takes you to a screen where you can name the app and create a new role.

Next, add a data source to configure the LLM responses. In this case, we chose Web Crawler so the Application will use content from our website when responding to questions.

The crawler syncs data from https://www.crafted.solutions/ daily, so the dataset stays fresh as we publish new content such as blogs or white papers.

The Application builder provides a nice web preview where it’s possible to test the augmented LLM. In this case, we asked about a Balanced Team and received a response based on the Crafted website with a source for the response.

We plan to keep testing:

Speed of response

Accuracy of response

Using the API to access the application

Providing feedback to the model programmatically
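To compare response speed across backends, a small timing helper could look like the sketch below. It is only an illustration: `time_responses` and `fake_backend` are hypothetical names, and `ask` stands in for whatever function ends up calling Amazon Q or the OpenAI Assistant.

```python
import statistics
import time
from typing import Callable, Dict, List


def time_responses(ask: Callable[[str], str], questions: List[str]) -> Dict[str, float]:
    """Call `ask` once per question and summarize the latencies in seconds."""
    latencies = []
    for question in questions:
        start = time.perf_counter()
        ask(question)  # the answer itself is ignored; only the timing matters here
        latencies.append(time.perf_counter() - start)
    return {
        "count": len(latencies),
        "median_s": statistics.median(latencies),
        "max_s": max(latencies),
    }


def fake_backend(question: str) -> str:
    """Hypothetical stand-in for a real chatbot call; sleeps to simulate latency."""
    time.sleep(0.01)
    return f"stub answer to: {question}"


if __name__ == "__main__":
    print(time_responses(fake_backend, ["Where are you located?", "What is a Balanced Team?"]))
```

Running the same question list through each backend would give numbers that can be compared directly, rather than relying on how fast a response feels.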

Technical Next Steps:

Rate limiting

Investigate Retrieval-Augmented Generation (RAG) with AWS and GCP to benchmark against OpenAI’s Assistant

Technical Accomplishments Since Week 1:

Investigate serving the microfrontend application with Kubernetes instead of S3 and Elastic Beanstalk ✅

We chose to stay with Beanstalk for now to focus on building POCs and other pieces of the platform. If we move in a different direction, it will likely be towards Terraform or CloudFormation to codify the current environment.

Build a GraphQL API to manage secure access to the CraftedGPT Custom LLM ✅

Product Management

Summary

Once the POC was live, feedback started coming in! Feedback came in from teammates who have helped build CraftedGPT, Crafted’s CEO, and a group of external usability testers. It’s become evident that users want the next iteration of CraftedGPT to be faster, more helpful with simple questions, and much more. Let’s dive into it.

Chatbot POC feedback

Users said the chatbot is SLOW

CraftedGPT didn’t answer simple questions well. Questions like: “Where are you located?” and “When was the company formed?”

Users are mixed on response length/structure. Some want short, concise answers and some want more detail.

Users found it odd that the chatbot expressed doubt when answering straightforward questions. They felt CraftedGPT should be more confident in its responses.

Some users said they weren’t sure what source CraftedGPT was citing or how to access it

Users have mixed opinions on response formatting. Some said it’s hard to digest lists and some said they wish it had more bullet points.

Users are seeing similar responses for different questions.

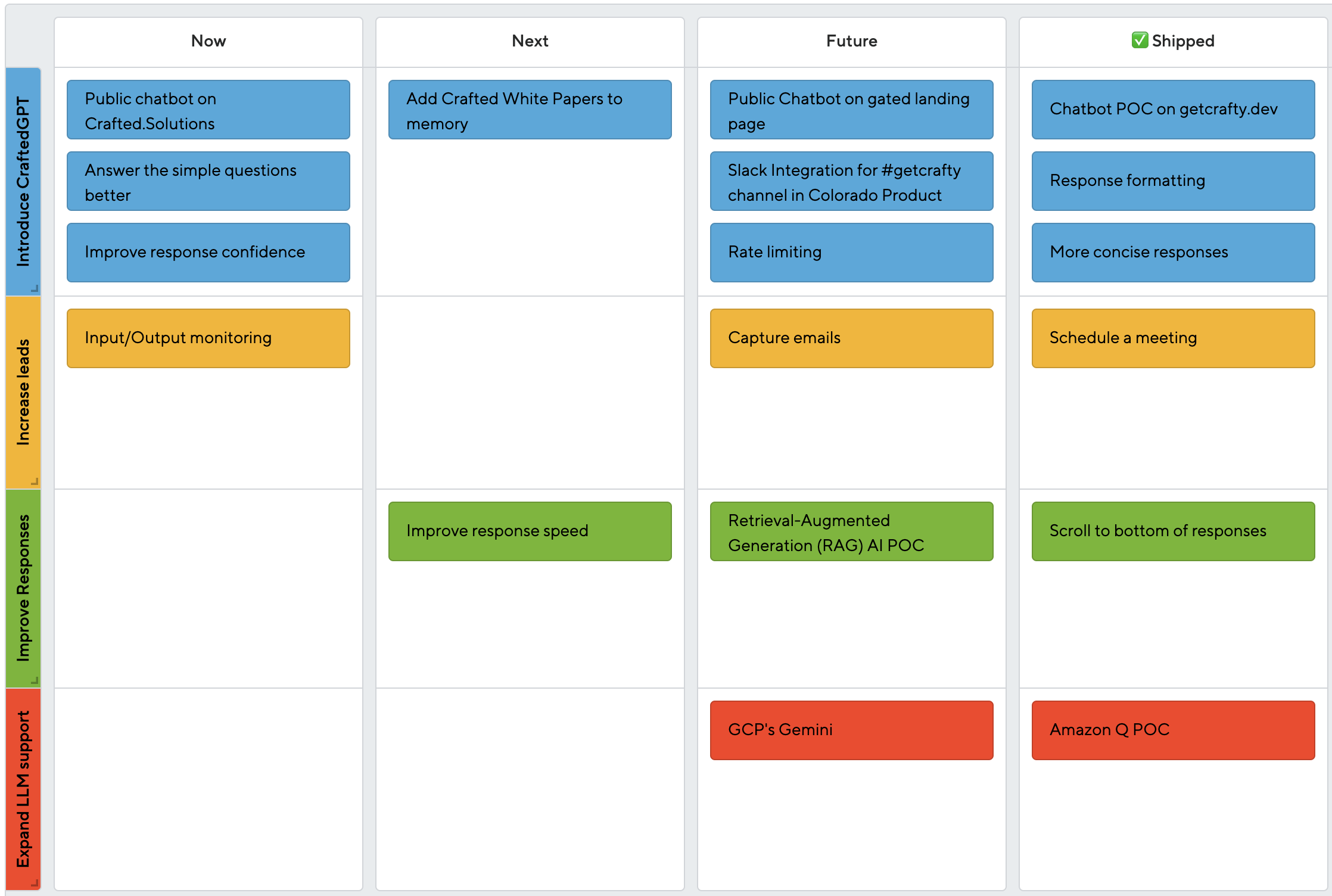

Based on feedback and progress, here’s our updated roadmap:

Strategic Questions

Are we ready for a public user/pass if someone wants to mess with it?

No

Is this a lead gen website where we need to capture emails?

Not now

Do we just want to gate access and we can share a single login with interested parties?

Not now, we want to stick with the vision of making this publicly available on our website first and can consider this later.

Do we want this to be eventually fully open and listed on the www.crafted.solutions website?

Yes, we want this on the Crafted website.

Yes, we need to continue iterating on the prompt.

No, we don’t need rate limiting. We can limit spending via the OpenAI monthly budget feature. But, if a user is very engaged, we want to talk to them. So we prioritized a feature that directs users to contact Crafted after three questions.
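As a rough sketch of the "contact Crafted after three questions" idea, the logic could be as small as a per-session counter. Everything here is an assumption for illustration: the class name, the nudge copy, and the choice to show the nudge starting with the third answer.

```python
from dataclasses import dataclass

CONTACT_THRESHOLD = 3  # from the post: nudge engaged users after three questions
# Hypothetical copy; the real wording is not defined yet.
CONTACT_NUDGE = "Enjoying the conversation? Reach out to Crafted and we'd be happy to chat!"


@dataclass
class ChatSession:
    """Tracks how many questions one visitor has asked in this session."""

    questions_asked: int = 0

    def record_question(self) -> None:
        self.questions_asked += 1

    def decorate(self, answer: str) -> str:
        """Append the contact nudge once the threshold has been reached."""
        if self.questions_asked >= CONTACT_THRESHOLD:
            return f"{answer}\n\n{CONTACT_NUDGE}"
        return answer


if __name__ == "__main__":
    session = ChatSession()
    for _ in range(3):
        session.record_question()
        print(session.decorate("Some answer"))
```

Keeping the counter on the session rather than on the user means no login or email capture is needed, which fits the decision not to gate access for now.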

Also, we learned what the user’s experience is when we hit the OpenAI monthly budget limit. The chatbot displays a continuous loading state but never returns an answer. An error state is something we’ll need to prioritize, but for now, we can just turn the feature off once we’ve come close to our monthly budget limit (aided by an OpenAI warning email you can configure for any dollar amount).
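One way to avoid the endless loading state is to put a time limit around the call and fall back to an error message. The sketch below assumes a hypothetical `ask` function that wraps the real API call; the 30-second default and the error copy are placeholders, not decisions we have made.

```python
import concurrent.futures
import time
from typing import Callable

# Hypothetical copy for the error state.
ERROR_MESSAGE = "Sorry, CraftedGPT is unavailable right now. Please try again later."


def ask_with_timeout(ask: Callable[[str], str], question: str, timeout_s: float = 30.0) -> str:
    """Return the answer, or ERROR_MESSAGE if the call is too slow or raises."""
    pool = concurrent.futures.ThreadPoolExecutor(max_workers=1)
    future = pool.submit(ask, question)
    try:
        return future.result(timeout=timeout_s)
    except Exception:
        # Covers both a timeout and an error raised by the backend call.
        return ERROR_MESSAGE
    finally:
        # Do not block on a stalled call; the worker finishes in the background.
        pool.shutdown(wait=False)


def stalled_backend(question: str) -> str:
    """Hypothetical stand-in for a call that hangs, like the one at the budget limit."""
    time.sleep(0.5)
    return "too late"


if __name__ == "__main__":
    print(ask_with_timeout(stalled_backend, "Where are you located?", timeout_s=0.05))
```

The user sees a clear message instead of a spinner, and the same fallback covers other failures, not just the budget limit.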

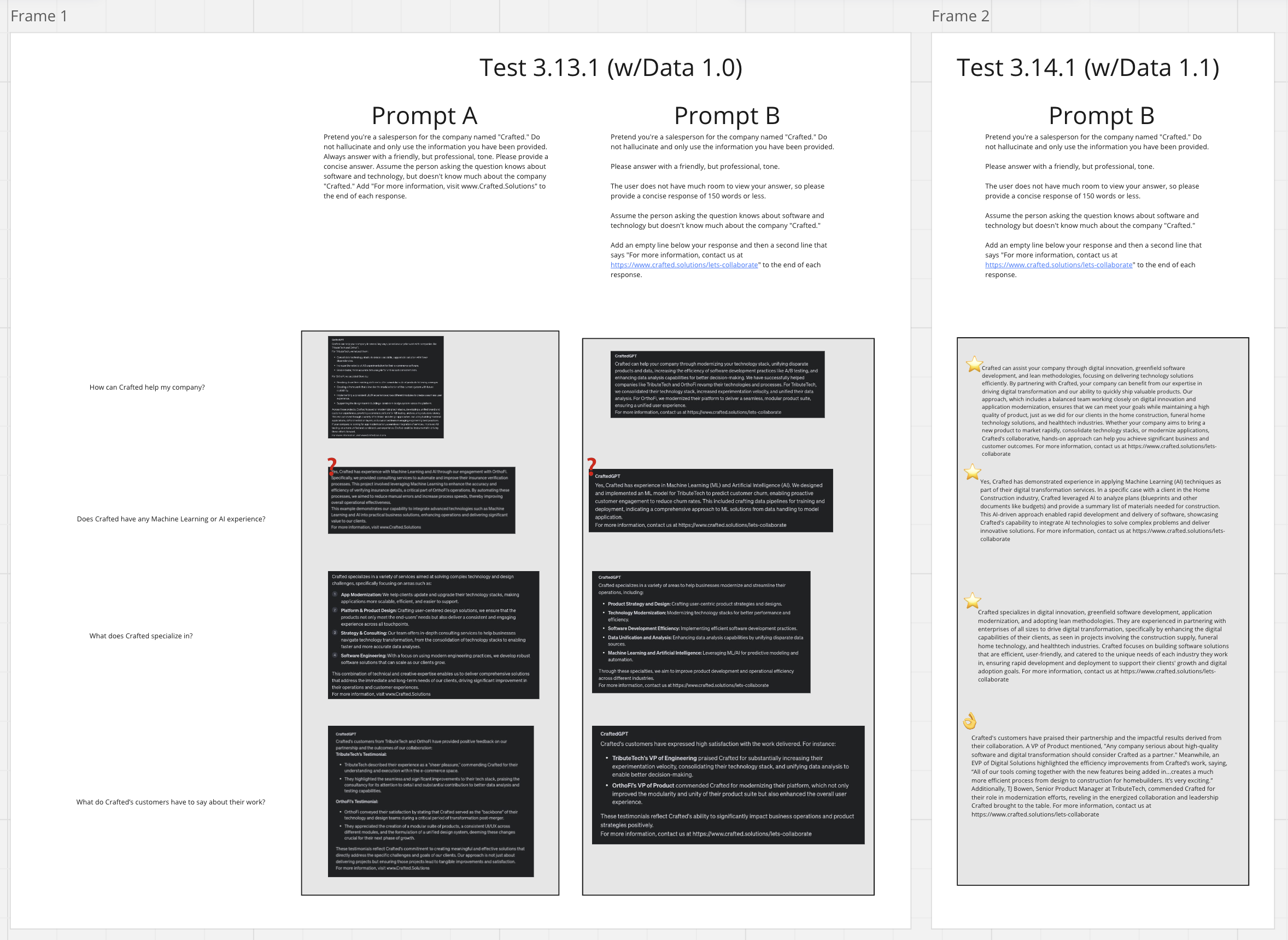

Instructions/Prompt Iterations

To incorporate feedback and iterate on the instructions (prompt) for the ChatGPT Assistant, we wanted to develop a test plan with a few key questions we need CraftedGPT to answer well. We’ll use these to test each time we make changes to the assistant’s instructions.

How can Crafted help my company?

Does Crafted have any machine learning or AI experience?

What does Crafted specialize in?

What do Crafted’s customers have to say about their work?
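The same test plan can be sketched in code: run the key questions against each version of the instructions and line the answers up for review. This is an illustration only; `ask`, `run_test_plan`, and `side_by_side` are hypothetical names, and a person still judges which answer is better.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple

KEY_QUESTIONS = [
    "How can Crafted help my company?",
    "Does Crafted have any machine learning or AI experience?",
    "What does Crafted specialize in?",
    "What do Crafted’s customers have to say about their work?",
]


@dataclass
class AnswerRecord:
    prompt_version: str
    question: str
    answer: str


def run_test_plan(
    ask: Callable[[str], str],
    prompt_version: str,
    questions: List[str] = KEY_QUESTIONS,
) -> List[AnswerRecord]:
    """Ask every key question once and record the answers for this prompt version."""
    return [AnswerRecord(prompt_version, q, ask(q)) for q in questions]


def side_by_side(before: List[AnswerRecord], after: List[AnswerRecord]) -> List[Tuple[str, str, str]]:
    """Pair up two runs as (question, old answer, new answer) rows for human review."""
    return [(b.question, b.answer, a.answer) for b, a in zip(before, after)]


if __name__ == "__main__":
    # Stub assistants stand in for two versions of the instructions.
    v1 = run_test_plan(lambda q: "v1 answer", "v1")
    v2 = run_test_plan(lambda q: "v2 answer", "v2")
    for row in side_by_side(v1, v2):
        print(row)
```

Because LLM answers vary from run to run, the sketch does not try to score them automatically; it only removes the copy-and-paste step.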

We leveraged Miro for our testing. This method has been great to get started, but as you can probably imagine, it’s extremely manual. After product management asked the rest of the Balanced Team for help, they surfaced PromptLayer as a more scalable solution with quantitative feedback and iteration tracking. Here’s a video describing the exact test scenario we’re looking for: Building & Evaluating a RAG Chatbot.

In case you aren’t aware, Crafted has experience implementing LLMs/chatbots for clients as well as other machine learning models and artificial intelligence strategies. Curious how we can help your company? Reach out to us and we’d be happy to chat!